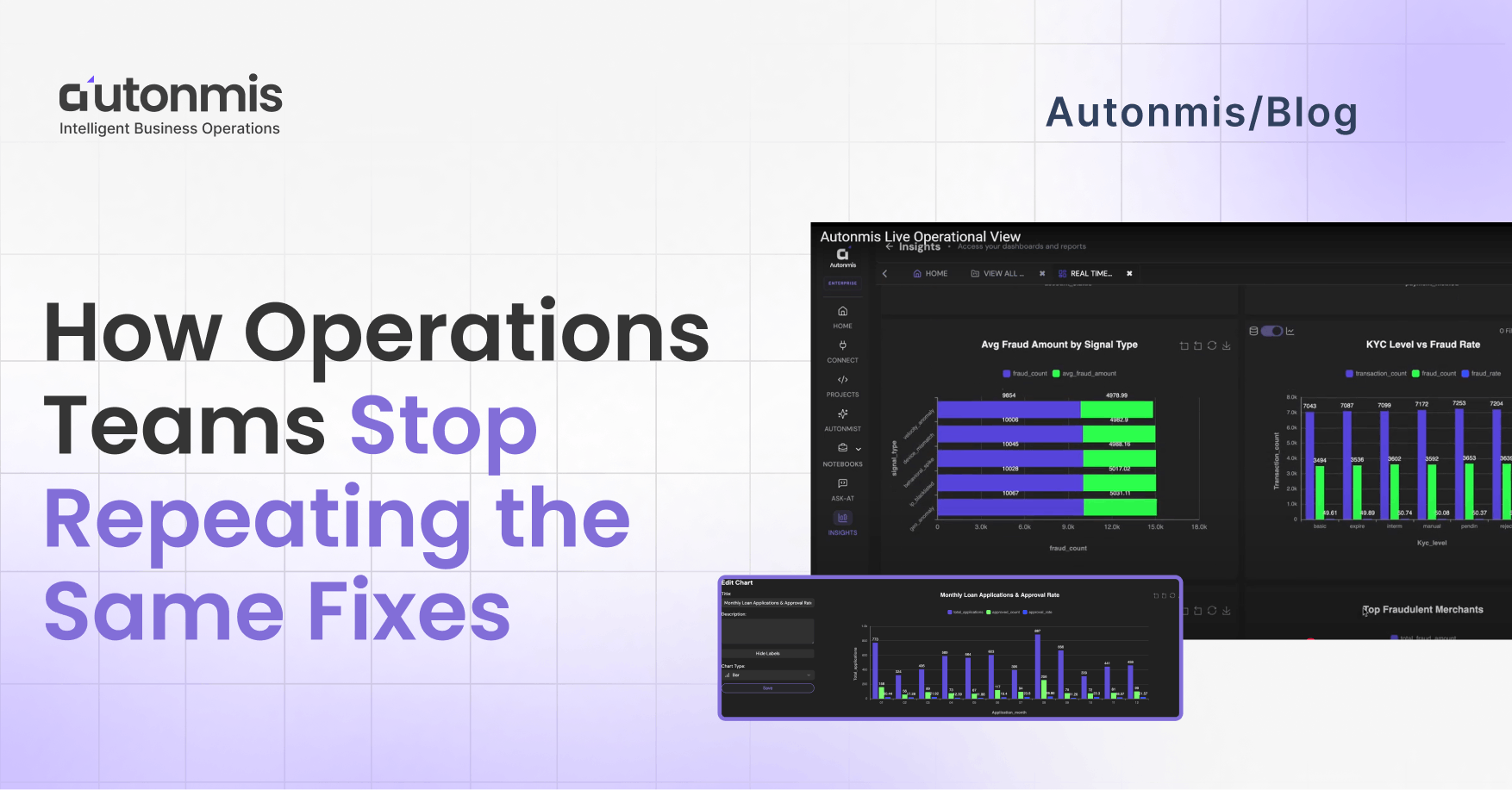

How Operations Teams Stop Repeating the Same Fixes

December 24, 2025

AB

When you run operations, the busy work is obvious. What isn’t obvious is the quiet repetition of the same small failures: the disbursement that stalls for a few days every month, the mandate that keeps failing with one partner, the reconciliation that drifts and gets patched in a hurry. These aren’t dramatic outages. They’re slow leaks, and over time they cost cash, confidence and time.

This article is a practical playbook for Heads of Operations who want to stop the loop. It’s about the way work changes when you stop treating exceptions as one-off tasks and start treating them as owned, measurable signals your organization must remember.

Why it keeps happening: two simple reasons

1. You notice too late.

Something odd happens, but you only see it after customers complain or the MIS shows a backlog. By then the problem has multiplied.

2. You forget what you fixed.

Someone applies a temporary patch, marks the ticket resolved, and the record of what they did (and whether it actually worked) disappears. So the same investigation happens again next month.

Detection is necessary, but it’s not enough. You need a way to notice earlier and make fixes stick.

A five-step operating pattern that prevents repeats

Below is a practical pattern you can use immediately. Each step is tactical and comes with its own measure.

1) Capture failures in one place

Start by making discovery automatic. Event streams live across your LOS (loan origination system), LMS (loan management system), payment gateways, bank files and more. The aim isn’t to ingest everything at once; it’s to capture the event types that show early drift (presentation failures, retries, status mismatches). When those events are pulled into a single operational layer, exceptions can be surfaced as structured objects (what failed, when, and at which workflow stage). This is an operational baseline, not a project.

Measure: % of exceptions discovered by automated checks vs by tickets/complaints.
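To make step 1 concrete, here is a minimal Python sketch, assuming each source system can emit events as simple dictionaries. The field names (`event_type`, `ts`, `stage`, `source`), the `detected_by` labels and the set of drift event types are all hypothetical, not a prescribed schema. It turns raw events into structured exception objects and computes the measure above.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical event types treated as early drift signals (illustrative only).
DRIFT_EVENT_TYPES = {"presentation_failure", "retry", "status_mismatch"}


@dataclass(frozen=True)
class OpsException:
    """A structured exception: what failed, when, and at which workflow stage."""
    what: str
    when: datetime
    stage: str
    source: str
    detected_by: str  # "automated_check" or "ticket" (hypothetical labels)


def capture(events: list) -> list:
    """Keep only drift-type events and normalise them into OpsException objects."""
    return [
        OpsException(
            what=e["event_type"],
            when=datetime.fromisoformat(e["ts"]),
            stage=e["stage"],
            source=e["source"],
            detected_by="automated_check",
        )
        for e in events
        if e["event_type"] in DRIFT_EVENT_TYPES
    ]


def pct_automated(exceptions: list) -> float:
    """% of exceptions discovered by automated checks rather than tickets/complaints."""
    if not exceptions:
        return 0.0
    auto = sum(1 for x in exceptions if x.detected_by == "automated_check")
    return 100.0 * auto / len(exceptions)


events = [
    {"event_type": "retry", "ts": "2025-12-01T10:00:00", "stage": "disbursement", "source": "gateway"},
    {"event_type": "heartbeat", "ts": "2025-12-01T10:01:00", "stage": "disbursement", "source": "gateway"},
    {"event_type": "status_mismatch", "ts": "2025-12-01T11:00:00", "stage": "disbursement", "source": "LOS"},
]
found = capture(events)  # the heartbeat is not a drift signal, so it is dropped
from_ticket = OpsException("stuck_disbursement", datetime(2025, 12, 2), "disbursement", "helpdesk", "ticket")
print(len(found), round(pct_automated(found + [from_ticket]), 1))  # 2 66.7
```

Exceptions raised from tickets or complaints are recorded in the same shape, so the ratio compares like with like.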

2) Group similar cases together

When you can view similar failures together (by tag, failure class or downstream impact), you stop reacting case-by-case. That’s the shift from firefighting to problem ownership: dozens of “stuck disbursements” may actually be one recurring bank-integration issue. Grouping reveals whether to fix a process, harden a contract, or change a routing rule. In short: see patterns, not noise.

Measure: # of distinct root-cause classes vs total exception volume.
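A minimal sketch of step 2 in Python, assuming each exception already carries a `failure_class` and a partner tag such as `bank` (both hypothetical field names). Grouping is a plain count over a composite key; the ratio at the end is one way to read the measure above: the lower it is, the more of the volume traces back to a few root causes.

```python
from collections import Counter

# Each exception reduced to the fields used for grouping (hypothetical sample data).
exceptions = [
    {"id": 1, "failure_class": "bank_integration", "bank": "BankA"},
    {"id": 2, "failure_class": "bank_integration", "bank": "BankA"},
    {"id": 3, "failure_class": "bank_integration", "bank": "BankA"},
    {"id": 4, "failure_class": "data_mismatch", "bank": "BankB"},
    {"id": 5, "failure_class": "bank_integration", "bank": "BankA"},
]


def group_exceptions(items, keys=("failure_class", "bank")):
    """Count exceptions per composite key so patterns show up instead of single cases."""
    return Counter(tuple(item[k] for k in keys) for item in items)


def class_concentration(items):
    """Distinct root-cause classes divided by total exception volume."""
    if not items:
        return 0.0
    distinct = len({item["failure_class"] for item in items})
    return distinct / len(items)


groups = group_exceptions(exceptions)
print(groups.most_common(1))            # [(('bank_integration', 'BankA'), 4)]
print(class_concentration(exceptions))  # 0.4
```

Here four of five "separate" cases collapse into one bank-integration group, which is the signal to fix the integration rather than the individual records.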

3) Assign ownership and keep it beyond closure

A one-off fix is not prevention. Convert grouped exceptions into tracked queues with a named owner, SLAs, and escalation paths. Require validation before you call the job done: did the fix remove recurrence over a defined observation window? Ownership must persist until the causal chain is addressed, not end when someone clicks “resolved.” Doing this makes fixes measurable and repeatable.

Measure: % of closures with post-resolution verification, and the recurrence rate within 30/60/90 days.
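One way to encode the rule in step 3, sketched in Python under stated assumptions: the `ExceptionGroup` fields and the 30-day default observation window are illustrative, not a standard. A group cannot be closed until it has a named owner, a recorded fix with evidence, and a full observation window with no recurrence of the same failure class.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional


@dataclass
class ExceptionGroup:
    failure_class: str
    owner: Optional[str] = None
    fix_applied_on: Optional[date] = None
    evidence: list = field(default_factory=list)
    # Dates on which the same failure class was seen again.
    occurrences: list = field(default_factory=list)


def can_close(group: ExceptionGroup, today: date, window_days: int = 30) -> bool:
    """Close only if owned, fixed, evidenced, and quiet for a full observation window."""
    if group.owner is None or group.fix_applied_on is None or not group.evidence:
        return False
    window_end = group.fix_applied_on + timedelta(days=window_days)
    if today < window_end:
        return False  # observation window still running
    recurred = any(group.fix_applied_on < d <= window_end for d in group.occurrences)
    return not recurred


g = ExceptionGroup("bank_integration", owner="ops-lead",
                   fix_applied_on=date(2025, 11, 1), evidence=["retry config changed"])
print(can_close(g, today=date(2025, 11, 15)))  # False: window still open
print(can_close(g, today=date(2025, 12, 5)))   # True: 30 quiet days
g.occurrences.append(date(2025, 11, 20))
print(can_close(g, today=date(2025, 12, 5)))   # False: recurred inside the window
```

Clicking "resolved" early simply has no effect here: closure depends on what happened after the fix, not on when someone marked it done.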

4) Catch the first signal

Leading indicators exist: missing events, retries and state mismatches are often the first sign that a larger failure is forming. Instrument the right places so you see drift before it cascades. Early detection can reduce the cost of intervention by an order of magnitude; it is the difference between a short remedial script and an emergency incident response.

Measure: Median time from first anomalous signal to exception creation (TTD).
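A minimal Python sketch of step 4, assuming each disbursement record carries a retry count plus the status seen in the LOS and in the bank's acknowledgement. The rule and the `MAX_RETRIES` limit are hypothetical thresholds you would tune per workflow. The second function computes TTD as defined above from (first signal, exception created) timestamp pairs.

```python
from datetime import datetime
from statistics import median

MAX_RETRIES = 2  # hypothetical limit; tune per workflow


def first_signal(txn: dict) -> bool:
    """True if a disbursement shows any leading indicator of a larger failure."""
    missing_ack = txn["bank_status"] is None          # expected event never arrived
    too_many_retries = txn["retries"] > MAX_RETRIES   # repeated attempts
    state_mismatch = (not missing_ack) and txn["los_status"] != txn["bank_status"]
    return missing_ack or too_many_retries or state_mismatch


def ttd_minutes(pairs):
    """Median minutes from first anomalous signal to exception creation (TTD)."""
    gaps = [(created - signal).total_seconds() / 60 for signal, created in pairs]
    return median(gaps) if gaps else None


txns = [
    {"id": "D1", "retries": 0, "los_status": "disbursed", "bank_status": "disbursed"},
    {"id": "D2", "retries": 3, "los_status": "disbursed", "bank_status": "disbursed"},
    {"id": "D3", "retries": 0, "los_status": "disbursed", "bank_status": None},
]
flagged = [t["id"] for t in txns if first_signal(t)]
print(flagged)  # ['D2', 'D3']

pairs = [
    (datetime(2025, 12, 1, 9, 0), datetime(2025, 12, 1, 9, 20)),
    (datetime(2025, 12, 1, 10, 0), datetime(2025, 12, 1, 11, 0)),
    (datetime(2025, 12, 1, 12, 0), datetime(2025, 12, 1, 12, 5)),
]
print(ttd_minutes(pairs))  # 20.0
```

The median is used rather than the mean so that one slow case does not hide an otherwise fast detection loop.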

5) Record the fix and watch for regressions

When the work is done, record: what failed, what changed, who acted and how success was validated. Keep automated checks that will trigger if the pattern reappears. This is how a one-off becomes a permanent fix: the system holds the evidence and watches for regressions. That audit trail also makes leadership conversations factual instead of anecdotal.

Measure: Recurrence rate for the same failure class after remediation.
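A sketch of step 5 in Python, assuming fixes are logged as simple records. `FixRecord`, its fields and the 90-day default window are illustrative choices mirroring the paragraph above, not a required format. `recurrence_rate` implements the measure above; `regression_alert` shows how a reappearing class can be tied back to the earlier fix so the next investigation starts from evidence.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class FixRecord:
    """Audit record: what failed, what changed, who acted, how success was validated."""
    failure_class: str
    what_changed: str
    acted_by: str
    validation: str
    fixed_on: date


def recurrence_rate(fixes, occurrences, window_days=90):
    """% of remediated failure classes that reappeared within window_days of the fix.

    `occurrences` is a list of (failure_class, date) pairs observed over time.
    """
    if not fixes:
        return 0.0
    recurred = 0
    for fix in fixes:
        window_end = fix.fixed_on + timedelta(days=window_days)
        if any(cls == fix.failure_class and fix.fixed_on < d <= window_end
               for cls, d in occurrences):
            recurred += 1
    return 100.0 * recurred / len(fixes)


def regression_alert(fix_log, failure_class, seen_on):
    """If a remediated class reappears, return the earlier fix records as context."""
    return [f for f in fix_log if f.failure_class == failure_class and seen_on > f.fixed_on]


log = [
    FixRecord("bank_integration", "ack timeout raised", "ops-lead",
              "no stuck cases in 30 days", date(2025, 9, 1)),
    FixRecord("mandate_rejection", "partner file format fixed", "ops-analyst",
              "rejections back to baseline", date(2025, 9, 15)),
]
seen = [("bank_integration", date(2025, 10, 20)), ("mandate_rejection", date(2026, 2, 1))]
print(recurrence_rate(log, seen, window_days=90))                          # 50.0
print(len(regression_alert(log, "bank_integration", date(2025, 10, 20))))  # 1
```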

Check out: How Ops Intelligence Reduces NPAs: Metrics That Matter

A short example

A mid-size lender had disbursements stuck with one bank every 4–6 weeks. The old flow: someone pulls LOS rows, gateway logs and bank files, matches IDs, patches the record, and marks the ticket resolved. Then it happens again.

Do this instead:

- Ingest the minimal event streams (LOS statuses, disbursement logs, bank acks).

- Surface “stuck disbursement” exceptions and group by bank and error code.

- Assign a prevention owner with a 30-day verification SLA.

- Add an early-warning rule: if the mandate presentation success rate or the acknowledgement rate (ack%) for that bank falls below X, create a priority exception.
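The early-warning rule in the last bullet can be sketched in Python as follows, assuming each disbursement in the current observation window is tagged with its bank and whether an acknowledgement arrived. `ACK_THRESHOLD` stands in for X and its value here is only a placeholder; the sketch tracks the ack rate only, and a mandate presentation check would follow the same shape.

```python
from collections import defaultdict

ACK_THRESHOLD = 0.95  # stands in for X; placeholder value, not a recommendation


def ack_rates(disbursements):
    """Share of disbursements acknowledged by each bank in the current window."""
    sent = defaultdict(int)
    acked = defaultdict(int)
    for d in disbursements:
        sent[d["bank"]] += 1
        if d["acked"]:
            acked[d["bank"]] += 1
    return {bank: acked[bank] / sent[bank] for bank in sent}


def early_warnings(disbursements, threshold=ACK_THRESHOLD):
    """Create a priority exception for every bank whose ack rate falls below threshold."""
    return [
        {"type": "low_ack_rate", "bank": bank, "ack_rate": rate, "priority": "high"}
        for bank, rate in sorted(ack_rates(disbursements).items())
        if rate < threshold
    ]


window = (
    [{"bank": "BankA", "acked": True}] * 18
    + [{"bank": "BankA", "acked": False}] * 2
    + [{"bank": "BankB", "acked": True}] * 20
)
print(early_warnings(window))
# [{'type': 'low_ack_rate', 'bank': 'BankA', 'ack_rate': 0.9, 'priority': 'high'}]
```

Because the rule fires on a rate over a window, it catches a bank drifting from 100% to 90% well before individual disbursements turn into customer complaints.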

Result: fewer silent failures, shorter detection time, and a clear drop in recurrence. The team moved from “find-and-fix” to “monitor-and-prevent.”

The handful of KPIs that matter

Don’t drown in charts. Track these five numbers and you’ll know if work is improving:

- Time to Detect (TTD): median time from first anomalous event to exception creation

- Time to Resolve (TTR): median time from exception creation to validated closure

- Recurrence Rate: % of same-class failures reappearing in 30/60/90 days

- Ownership Coverage: % of exceptions with a named prevention owner and post-fix evidence

- Operational Leakage: estimated monthly value at risk from unresolved exceptions
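TTD and recurrence follow the definitions in the list; the other three KPIs can be computed from exception records along these lines. This Python sketch assumes each record stores creation and validated-closure timestamps, an owner, a post-fix evidence flag and an estimated value at risk. These fields and the sample figures are hypothetical, and how you estimate value at risk is a business decision the code does not make for you.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class ExceptionRecord:
    created: datetime
    validated_closed: Optional[datetime]  # None while still open
    owner: Optional[str]
    has_post_fix_evidence: bool
    value_at_risk: float  # estimated monthly amount exposed (currency units)


def ttr_hours(records):
    """Median hours from exception creation to validated closure (closed ones only)."""
    gaps = [(r.validated_closed - r.created).total_seconds() / 3600
            for r in records if r.validated_closed is not None]
    return median(gaps) if gaps else None


def ownership_coverage(records):
    """% of exceptions with a named prevention owner AND post-fix evidence."""
    if not records:
        return 0.0
    covered = sum(1 for r in records if r.owner and r.has_post_fix_evidence)
    return 100.0 * covered / len(records)


def operational_leakage(records):
    """Estimated monthly value at risk from exceptions not yet closed."""
    return sum(r.value_at_risk for r in records if r.validated_closed is None)


recs = [
    ExceptionRecord(datetime(2025, 12, 1, 9), datetime(2025, 12, 1, 13), "ops-lead", True, 50_000.0),
    ExceptionRecord(datetime(2025, 12, 2, 9), datetime(2025, 12, 3, 9), "ops-lead", False, 20_000.0),
    ExceptionRecord(datetime(2025, 12, 3, 9), None, None, False, 75_000.0),
]
print(ttr_hours(recs))                     # 14.0
print(round(ownership_coverage(recs), 1))  # 33.3
print(operational_leakage(recs))           # 75000.0
```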

These metrics link ops work to money and risk, the language leadership understands.

How to start this quarter

Do this:

- Pick one workflow that matters (disbursements, mandates, early collections). Keep it narrow.

- Stream the minimal events needed to detect drift (status changes, retries, bank acks).

- Define 4–6 failure classes and a simple grouping rule. Keep the taxonomy small.

- Assign an owner, set a short verification SLA, and require evidence.

- Measure TTD, TTR and recurrence. Use these to prioritise the next fix.
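For the third bullet, a small taxonomy and grouping rule can be as simple as prefix matching on error codes. The Python sketch below assumes your systems emit error codes with stable prefixes; the four classes and the codes are hypothetical examples for a disbursement workflow. Anything that matches no rule lands in a fallback bucket rather than being forced into a class.

```python
# A deliberately small taxonomy: failure class -> error-code prefixes that map to it.
# Classes and codes are hypothetical examples.
FAILURE_CLASSES = {
    "bank_integration": ("BNK_TIMEOUT", "BNK_NO_ACK"),
    "data_mismatch": ("DATA_",),
    "mandate_rejection": ("MND_",),
    "partner_file": ("FILE_",),
}
FALLBACK = "unclassified"


def classify(error_code: str) -> str:
    """Map a raw error code to one failure class by prefix; first match wins."""
    for failure_class, prefixes in FAILURE_CLASSES.items():
        if error_code.startswith(prefixes):  # str.startswith accepts a tuple
            return failure_class
    return FALLBACK


codes = ["BNK_TIMEOUT_504", "DATA_IFSC_INVALID", "MND_REJ_07", "XYZ_123"]
print([classify(c) for c in codes])
# ['bank_integration', 'data_mismatch', 'mandate_rejection', 'unclassified']
```

Keeping an explicit `unclassified` bucket makes it visible when the taxonomy needs a new class, instead of silently stretching an existing one.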

Don’t do this:

- Don’t try to fix every workflow at once.

- Don’t measure vanity metrics.

- Don’t assume a single alert fixes culture: the work is practice plus clear ownership.

Where Autonmis fits into this way of working

This operating pattern didn’t come from theory. It came from watching operations teams struggle with the same gaps again and again: late discovery, unclear ownership, and fixes that don’t stick.

Autonmis was built to support this exact way of running ops.

It sits across existing systems (databases, operational tools, partner feeds) and keeps the operational signals in sync. Instead of waiting for reports or tickets, teams see exceptions as they form, with context about where they sit in the workflow and why they matter. Grouping, ownership, SLAs, and verification aren’t afterthoughts; they’re part of how work gets done day to day.

The goal isn’t more dashboards or more alerts.

It’s shorter discovery time, clearer ownership, and fewer repeat failures.

If you’re already thinking in terms of early signals, prevention ownership, and institutional memory, Autonmis simply gives you a way to run that model consistently, without adding more manual work.

Check out: How a COO Uses Autonmis for Predictable Operations

Final note

Fixing things faster helps. Stopping the same thing from needing a fix at all is where the real value is. It takes steady habits: notice earlier, own properly, and keep the record. It’s not glamorous. But it’s the work that makes a company predictable and trusted.

If helpful, I can turn this into a one-page checklist for a 30-day ops sprint or a short owner’s playbook you can hand to team leads.

Recommended Blogs

2/10/2026

AB

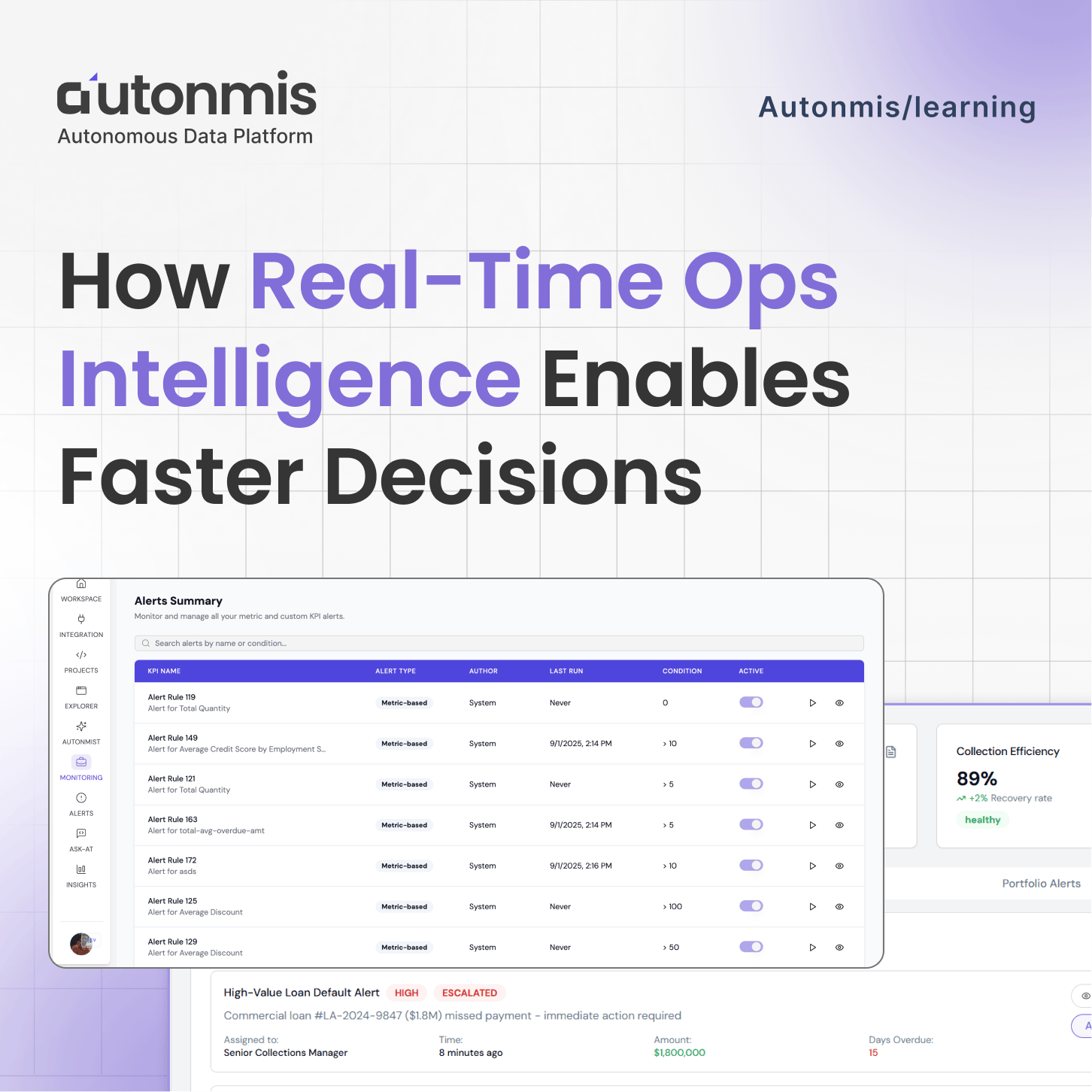

How Real-Time Ops Intelligence Enables Faster Decisions

12/1/2025

AB

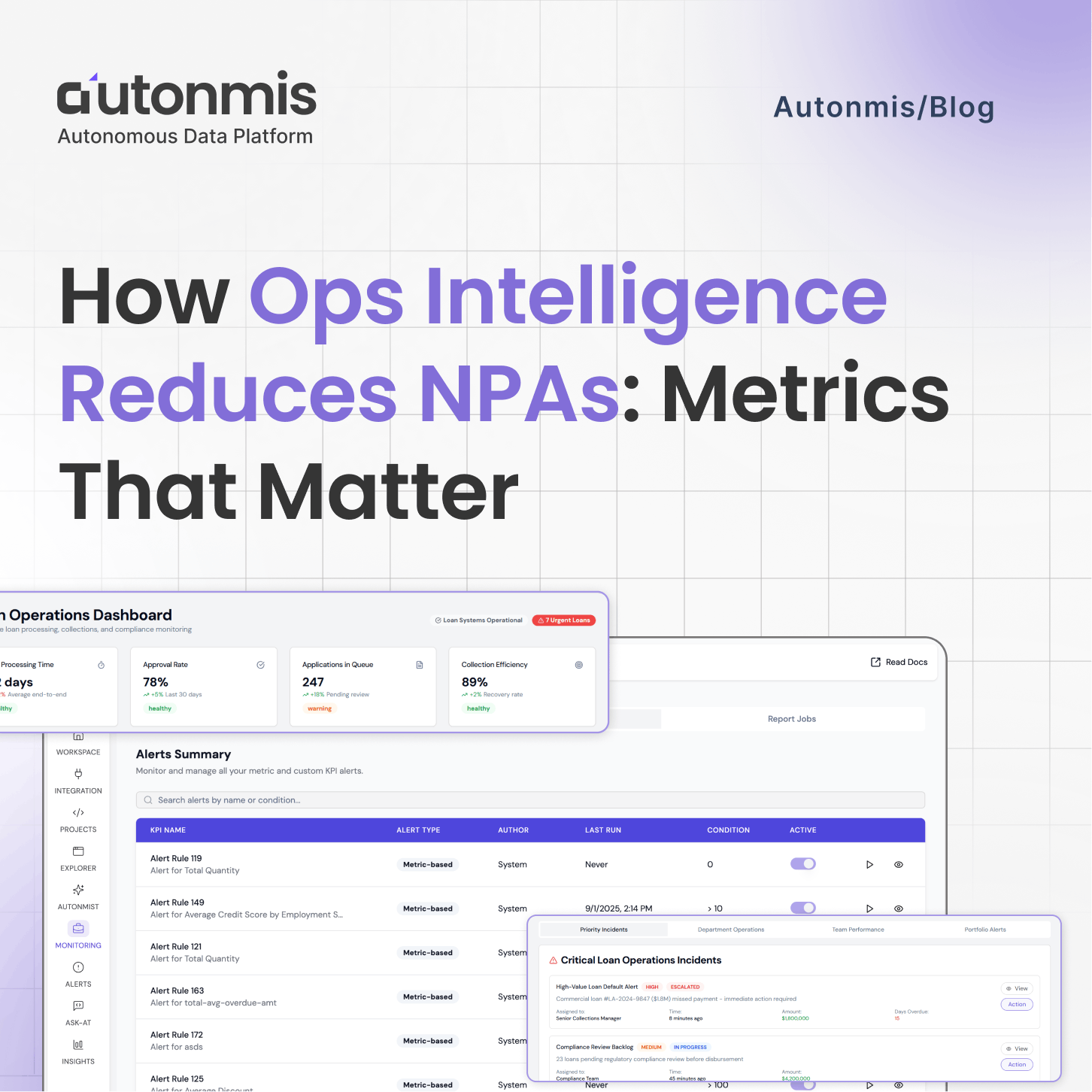

How Ops Intelligence Reduces NPAs: Metrics That Matter

Actionable Operations Excellence

Autonmis helps modern teams own their entire operations and data workflow — fast, simple, and cost-effective.