
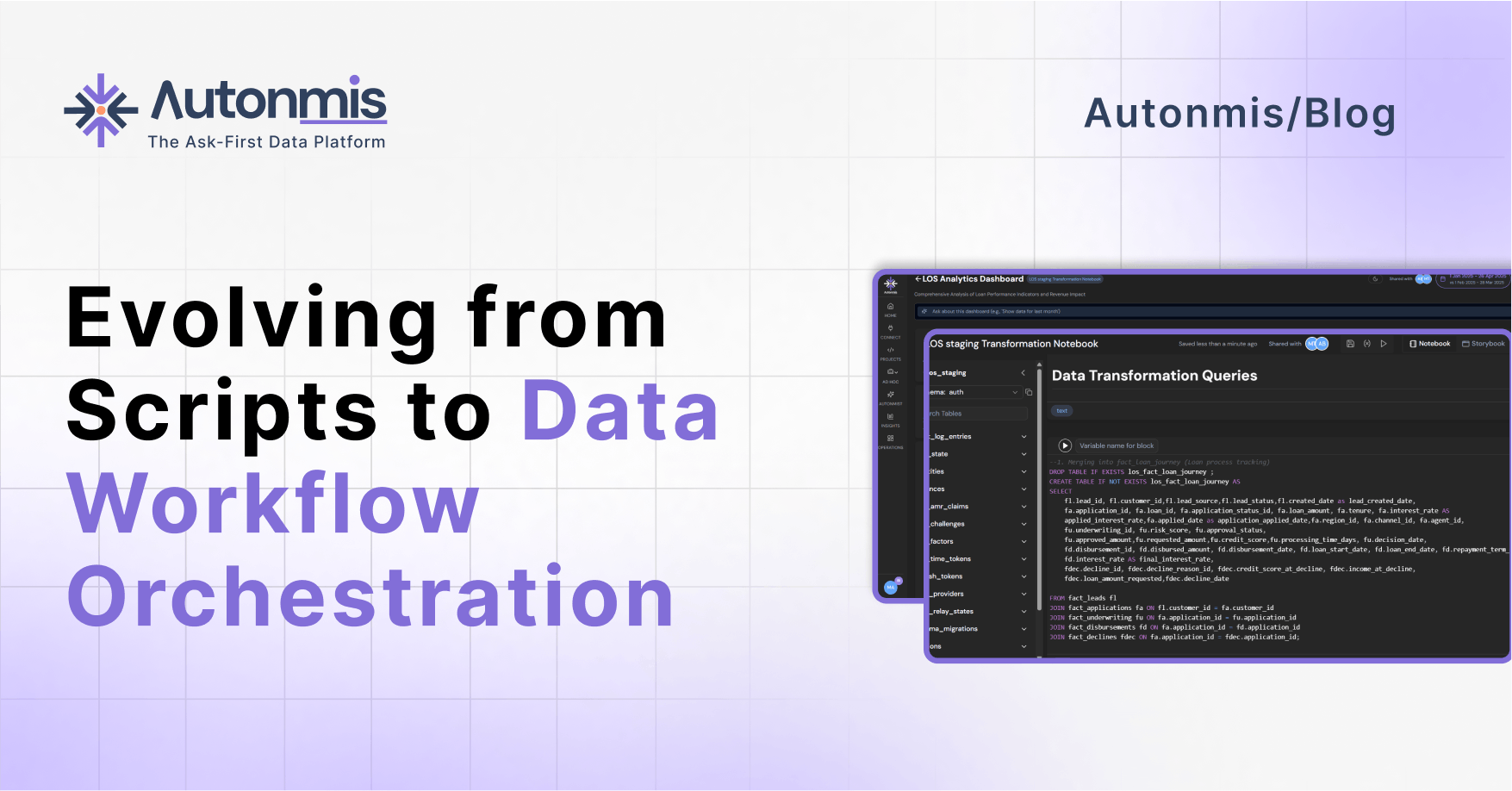

Evolving from Scripts to Data Workflow Orchestration

Discover how growing organizations can evolve their data workflow management from basic scripts to production-grade systems. Learn essential patterns and practical considerations for building orchestration capabilities that balance sophistication with maintainability.

November 25, 2024

AB

A Journey from Scripts to Production Systems

For growing organizations, the evolution of data workflow management is a journey that mirrors their own growth. What starts as a few simple scheduled scripts often needs to evolve into a robust orchestration system. Let's explore this journey from scripts to data workflow orchestration through real-world scenarios and practical patterns.

Understanding the Data Orchestration Journey

The Growing Pains

Consider a typical mid-sized e-commerce company's data needs:

- Daily sales reports need to combine data from multiple systems

- Customer segmentation must be updated weekly

- Inventory forecasts need to be refreshed every six hours

- Marketing campaign performance requires near real-time updates

Initially, these needs are met with simple solutions. But as the business grows, several challenges emerge:

- Dependency Nightmares:
  - Marketing can't run their analysis until sales data is processed
  - Sales forecasts need both historical data and current inventory levels
  - Customer segments need updated purchase history and behavior data

- Resource Conflicts:
  - Multiple reports trying to access the same database simultaneously
  - Heavy transformations slowing down operational systems
  - Competing processes causing server overload

- Reliability Issues:
  - Failed jobs going unnoticed
  - Incomplete data causing downstream problems
  - No clear way to recover from failures

The Evolution of Data Workflow Management

Stage 1: The Script Era

Most organizations start here, with a collection of scheduled scripts. It's simple but problematic:

Common Setup:

- Cron jobs running Python scripts

- Basic logging to files

- Email alerts for failures

- Manual dependency management

Why It Breaks:

- No visibility into running jobs

- Dependencies managed through timing (e.g., scheduling a job an hour after its upstream job and hoping the upstream job has finished)

- Manual intervention needed for failures

- No way to handle partial successes

- Resource conflicts are common

Stage 2: Basic Orchestration

Organizations then typically move to basic orchestration tools, bringing some structure to their workflows:

Key Improvements:

- Centralized Scheduling:
  - All jobs managed from one place
  - Basic dependency mapping
  - Simple retry mechanisms

- Better Monitoring:
  - Centralized logging
  - Basic alerting
  - Job status tracking
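The Stage 2 improvements can be illustrated with a minimal Python sketch of a centralized runner. It maps dependencies, retries each job a fixed number of times, and logs a final status for every job. The function `run_jobs` and the job names are hypothetical. Real orchestration tools provide these features with much more robustness.

```python
import logging
from typing import Callable, Dict, List

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("scheduler")


def run_jobs(jobs: Dict[str, Callable[[], None]],
             depends_on: Dict[str, List[str]],
             max_retries: int = 2) -> Dict[str, str]:
    """Run jobs in dependency order and return each job's final status.

    A job runs only after all of its upstream jobs have a status; if any
    upstream job did not succeed, the job is skipped instead of run.
    """
    status: Dict[str, str] = {}
    pending = list(jobs)
    while pending:
        ready = [j for j in pending if all(d in status for d in depends_on.get(j, []))]
        if not ready:
            raise ValueError(f"Unresolvable or circular dependencies: {pending}")
        for name in ready:
            pending.remove(name)
            if any(status[d] != "success" for d in depends_on.get(name, [])):
                status[name] = "skipped"
                log.warning("%s skipped: an upstream job did not succeed", name)
                continue
            # One initial attempt plus up to `max_retries` retries.
            for attempt in range(1, max_retries + 2):
                try:
                    jobs[name]()
                    status[name] = "success"
                    log.info("%s succeeded on attempt %d", name, attempt)
                    break
                except Exception as exc:
                    log.error("%s failed on attempt %d: %s", name, attempt, exc)
            else:
                status[name] = "failed"
    return status


# Usage: the marketing report waits for the sales load, which fails once.
calls = {"load_sales": 0}


def load_sales() -> None:
    calls["load_sales"] += 1
    if calls["load_sales"] == 1:
        raise RuntimeError("database busy")  # simulated transient failure


print(run_jobs({"load_sales": load_sales, "marketing_report": lambda: None},
               {"marketing_report": ["load_sales"]}))
# {'load_sales': 'success', 'marketing_report': 'success'}
```

The fixed retry count and "skip downstream on failure" rule are the kind of basic error handling that the continuing challenges below refer to.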

Continuing Challenges:

- Limited scaling capabilities

- Basic error handling

- Manual recovery processes

- Resource management still manual

Stage 3: Production-Grade Orchestration

This is where modern workflow orchestration systems come in. They address the remaining challenges of scaling, error handling, recovery, and resource management through the core components described below.

Core Components of Modern Data Workflow Orchestration

1. Data Workflow Definition and Data Management

Key Concepts:

- Declarative Workflows: Define what needs to happen, not how

- Dynamic Dependencies: Dependencies based on conditions and data

- Reusable Components: Building blocks that can be shared across workflows

Real-World Example: A retail company's daily sales analysis workflow:

- Wait for point-of-sale data to be available

- Process returns and adjustments

- Calculate store-level metrics

- Update regional dashboards

- Generate exception reports
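The retail workflow above can be declared as data rather than as imperative code. The sketch below uses Python's standard `graphlib` module (Python 3.9+). It maps each task to its upstream tasks and derives the execution order automatically. The task names are illustrative. The sketch also assumes that the dashboards and the exception reports both depend only on the store-level metrics, so they can run in parallel.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical task names; each task maps to the set of tasks it depends on.
DAILY_SALES_WORKFLOW: Dict[str, Set[str]] = {
    "wait_for_pos_data": set(),
    "process_returns": {"wait_for_pos_data"},
    "store_metrics": {"process_returns"},
    "regional_dashboards": {"store_metrics"},
    "exception_reports": {"store_metrics"},
}


def execution_stages(workflow: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into stages; tasks in the same stage do not depend on each other."""
    sorter = TopologicalSorter(workflow)
    sorter.prepare()  # raises graphlib.CycleError if the workflow has a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


for number, stage in enumerate(execution_stages(DAILY_SALES_WORKFLOW), start=1):
    print(f"stage {number}: {stage}")
```

Running the script prints four stages, with `exception_reports` and `regional_dashboards` together in the last one. To add a task, you add one dictionary entry. The order is derived, not maintained by hand, which is the "what, not how" idea behind declarative workflows.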

2. Intelligent Scheduling

Key Features:

- Event-Based Triggers: Start workflows based on data availability

- Resource-Aware Scheduling: Consider system capacity

- Priority Management: Handle competing workflow needs

- Time-Window Management: Ensure business SLAs are met
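An event-based trigger often comes down to a "sensor" that waits for data to arrive before a workflow starts. This minimal sketch polls for a file and gives up after a timeout, which is also a simple way to surface a missed time window. The function `wait_for_data` and the file name are hypothetical. Many production schedulers can react to storage or database events directly instead of polling.

```python
import tempfile
import time
from pathlib import Path


def wait_for_data(path: Path, timeout_s: float = 60.0, poll_s: float = 1.0) -> bool:
    """Return True once `path` exists and is non-empty, or False after `timeout_s`."""
    deadline = time.monotonic() + timeout_s
    while True:
        if path.exists() and path.stat().st_size > 0:
            return True
        if time.monotonic() >= deadline:
            return False  # time window missed; the caller can alert or skip the run
        time.sleep(poll_s)


# Usage with a temporary directory standing in for a data landing zone.
with tempfile.TemporaryDirectory() as tmp:
    pos_file = Path(tmp) / "pos_sales_2024-11-25.csv"  # hypothetical file name
    print(wait_for_data(pos_file, timeout_s=0.2, poll_s=0.05))  # False: nothing landed yet
    pos_file.write_text("store_id,amount\n1,9.99\n")
    print(wait_for_data(pos_file, timeout_s=0.2, poll_s=0.05))  # True
```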

Practical Application: Consider a financial services company processing transactions:

- High-priority fraud detection workflows

- Medium-priority daily reconciliation

- Low-priority analytical workflows

- All competing for the same resources
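One simple way to implement priority management for this scenario is a priority queue. When worker slots free up, the highest-priority workflows are dispatched first, and workflows with equal priority run in submission order. This sketch uses Python's `heapq`. The class names, priority levels, and workflow names are assumptions for illustration.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count
from typing import List

# Lower number = dispatched first. Levels mirror the scenario above.
PRIORITY = {"high": 0, "medium": 1, "low": 2}


@dataclass(order=True)
class QueuedWorkflow:
    priority: int
    seq: int                          # tie-breaker: first in, first out within a level
    name: str = field(compare=False)  # excluded from ordering comparisons


class PriorityDispatcher:
    def __init__(self) -> None:
        self._heap: List[QueuedWorkflow] = []
        self._seq = count()

    def submit(self, name: str, level: str) -> None:
        heapq.heappush(self._heap, QueuedWorkflow(PRIORITY[level], next(self._seq), name))

    def dispatch(self, free_slots: int) -> List[str]:
        """Pop up to `free_slots` workflows, highest priority first."""
        batch = []
        while self._heap and len(batch) < free_slots:
            batch.append(heapq.heappop(self._heap).name)
        return batch


d = PriorityDispatcher()
d.submit("customer_cohort_analysis", "low")
d.submit("daily_reconciliation", "medium")
d.submit("fraud_detection", "high")
d.submit("ltv_model_refresh", "low")
print(d.dispatch(free_slots=2))  # ['fraud_detection', 'daily_reconciliation']
print(d.dispatch(free_slots=2))  # ['customer_cohort_analysis', 'ltv_model_refresh']
```

A pure priority queue can starve low-priority work when high-priority workflows keep arriving. Schedulers commonly counter this by gradually raising the priority of items that have waited a long time (aging).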

3. State Management and Recovery

Critical Aspects:

- Checkpointing: Track progress within workflows

- State Persistence: Maintain workflow state across system restarts

- Recovery Mechanisms: Handle various failure scenarios

- Partial Completion: Deal with partially successful workflows

Example Scenario: Processing customer data across regions:

- Some regions complete successfully

- Others fail due to data issues

- System needs to:
  - Retain successful processing
  - Retry failed regions
  - Maintain data consistency
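The regional scenario above can be handled with a checkpoint that records which regions finished. In this minimal sketch, state is persisted to a JSON file after each region, so a rerun skips completed regions and retries only the failed ones. The function name, file name, and region names are hypothetical. The sketch assumes `process` is idempotent for a region (for example, it overwrites that region's output), which keeps a retry from duplicating data.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, Iterable


def process_regions(regions: Iterable[str],
                    process: Callable[[str], None],
                    checkpoint: Path) -> Dict[str, str]:
    """Process each region, skipping regions the checkpoint already marks as done."""
    state: Dict[str, str] = json.loads(checkpoint.read_text()) if checkpoint.exists() else {}
    for region in regions:
        if state.get(region) == "done":
            continue  # retain successful work from an earlier run
        try:
            process(region)
            state[region] = "done"
        except Exception as exc:
            state[region] = f"failed: {exc}"
        # Persist after every region: write a temp file, then swap it in with
        # Path.replace so an interrupted write does not clobber the last checkpoint.
        tmp = checkpoint.with_suffix(".tmp")
        tmp.write_text(json.dumps(state, indent=2))
        tmp.replace(checkpoint)
    return state


# Usage: "apac" fails on its first attempt (a stand-in data issue) and is retried on rerun.
attempts = []


def load_region(region: str) -> None:
    attempts.append(region)
    if region == "apac" and attempts.count("apac") == 1:
        raise ValueError("malformed currency codes")


with tempfile.TemporaryDirectory() as tmp_dir:
    ckpt = Path(tmp_dir) / "customer_load.json"
    first = process_regions(["emea", "apac", "amer"], load_region, ckpt)
    second = process_regions(["emea", "apac", "amer"], load_region, ckpt)

print(first)     # {'emea': 'done', 'apac': 'failed: malformed currency codes', 'amer': 'done'}
print(second)    # {'emea': 'done', 'apac': 'done', 'amer': 'done'}
print(attempts)  # ['emea', 'apac', 'amer', 'apac']
```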

4. Resource Management

Key Capabilities:

- Resource Pooling: Manage shared resources effectively

- Concurrency Control: Prevent resource exhaustion by capping simultaneous tasks

- Load Balancing: Distribute work evenly

- Queue Management: Handle backlog effectively
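Concurrency control and queue management can be sketched with a semaphore. Each shared resource gets a fixed number of slots, and tasks that find the pool full wait their turn instead of overloading it. The `ResourcePool` class and the capacity of two are assumptions for illustration, not any specific tool's API.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class ResourcePool:
    """Caps how many tasks may use a shared resource (e.g. a database) at once."""

    def __init__(self, name: str, capacity: int) -> None:
        self.name = name
        self._slots = threading.BoundedSemaphore(capacity)
        self._lock = threading.Lock()
        self.in_use = 0
        self.peak = 0  # highest concurrent usage observed, useful for monitoring

    def __enter__(self) -> "ResourcePool":
        self._slots.acquire()  # blocks the caller while the pool is full
        with self._lock:
            self.in_use += 1
            self.peak = max(self.peak, self.in_use)
        return self

    def __exit__(self, *exc_info) -> bool:
        with self._lock:
            self.in_use -= 1
        self._slots.release()
        return False  # never suppress exceptions raised inside the block


reporting_db = ResourcePool("reporting_db", capacity=2)


def run_report(report_id: int) -> int:
    with reporting_db:
        time.sleep(0.05)  # stand-in for a heavy query
    return report_id


# Eight worker threads exist, but at most two reports use the database at once;
# the other threads wait on the semaphore until a slot frees up.
with ThreadPoolExecutor(max_workers=8) as executor:
    results = list(executor.map(run_report, range(6)))

print(results)            # [0, 1, 2, 3, 4, 5]
print(reporting_db.peak)  # never more than 2
```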

5. Monitoring and Observability

Essential Elements:

- Real-Time Status: Current state of all workflows

- Historical Analysis: Past performance metrics

- Predictive Insights: Potential issues and bottlenecks

- Business Impact Tracking: Effect on business KPIs
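Predictive insights can start with something as simple as comparing a run's duration against its own history. The sketch below flags a run that takes more than `k` standard deviations longer than the historical mean. The function name, the default of three standard deviations, and the sample durations are illustrative assumptions, not a recommended model.

```python
from statistics import mean, stdev
from typing import List, Optional


def duration_alert(history_s: List[float], latest_s: float,
                   k: float = 3.0, min_history: int = 5) -> Optional[str]:
    """Return an alert message if `latest_s` exceeds mean + k * stdev of past runs."""
    if len(history_s) < min_history:
        return None  # too little history to judge what "normal" looks like
    ceiling = mean(history_s) + k * stdev(history_s)
    if latest_s > ceiling:
        return f"run took {latest_s:.0f}s; expected at most about {ceiling:.0f}s"
    return None


# Illustrative durations (seconds) of past nightly sales-report runs.
past_runs = [610, 590, 605, 620, 598, 612]
print(duration_alert(past_runs, 630))  # None: within the normal range
print(duration_alert(past_runs, 900))  # an alert message
```

The same comparison can also run against the elapsed time of a workflow that is still executing, giving an early warning before an SLA is actually missed.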

Best Practices for Data Workflow Orchestration

1. Start with Clear Workflow Documentation

Before implementation, document:

- Business processes being automated

- Dependencies between processes

- Required resources and constraints

- Expected outputs and consumers

- SLAs and timing requirements
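One way to keep this documentation close to the code is to capture it as structured data that tools can check. The hypothetical `WorkflowSpec` below records the items listed above. The helper `undocumented_dependencies` reports any dependency that has no spec of its own. All names and values are illustrative.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import Dict, List


@dataclass
class WorkflowSpec:
    name: str
    business_process: str            # what the workflow automates, in business terms
    sla_deadline: time               # time of day by which outputs must be ready
    depends_on: List[str] = field(default_factory=list)
    resources: List[str] = field(default_factory=list)   # e.g. databases, compute pools
    outputs: List[str] = field(default_factory=list)
    consumers: List[str] = field(default_factory=list)   # teams or systems using the outputs


def undocumented_dependencies(specs: List[WorkflowSpec]) -> Dict[str, List[str]]:
    """Map each workflow to the dependencies that have no spec of their own."""
    known = {spec.name for spec in specs}
    gaps = {spec.name: [d for d in spec.depends_on if d not in known] for spec in specs}
    return {name: missing for name, missing in gaps.items() if missing}


specs = [
    WorkflowSpec("sales_daily", "Combine sales data from multiple systems", time(7, 0),
                 resources=["reporting_db"], outputs=["sales_fact"], consumers=["finance"]),
    WorkflowSpec("marketing_performance", "Report campaign performance", time(9, 0),
                 depends_on=["sales_daily", "ad_spend_import"], consumers=["marketing"]),
]
print(undocumented_dependencies(specs))  # {'marketing_performance': ['ad_spend_import']}
```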

2. Build Progressive Monitoring

Layer your monitoring approach:

- Basic Execution Tracking:
  - Job status
  - Success/failure rates
  - Duration metrics

- Resource Utilization:
  - System resource usage
  - Database load
  - Network utilization

- Business Impact:
  - Data freshness
  - Processing delays
  - Quality metrics
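The execution and business-impact layers can be computed from data most orchestrators already record. This sketch assumes a simple list of run records and a last-loaded timestamp per dataset. The six-hour freshness window echoes the inventory forecast example earlier in the article. The resource-utilization layer is left out because it depends on the infrastructure being monitored. The names `Run`, `execution_metrics`, and `is_fresh` are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean
from typing import Dict, List


@dataclass
class Run:
    status: str        # "success" or "failed"
    duration_s: float


def execution_metrics(runs: List[Run]) -> Dict[str, float]:
    """Execution layer: success rate and average duration over recent runs."""
    if not runs:
        return {"success_rate": 0.0, "avg_duration_s": 0.0}
    return {
        "success_rate": sum(r.status == "success" for r in runs) / len(runs),
        "avg_duration_s": mean(r.duration_s for r in runs),
    }


def is_fresh(last_loaded_at: datetime, max_age: timedelta, now: datetime) -> bool:
    """Business layer: was the dataset refreshed within its agreed window?"""
    return now - last_loaded_at <= max_age


recent = [Run("success", 120.0), Run("success", 130.0), Run("failed", 15.0), Run("success", 125.0)]
print(execution_metrics(recent))  # {'success_rate': 0.75, 'avg_duration_s': 97.5}

now = datetime(2024, 11, 25, 9, 0, tzinfo=timezone.utc)
inventory_loaded_at = datetime(2024, 11, 25, 1, 30, tzinfo=timezone.utc)
print(is_fresh(inventory_loaded_at, timedelta(hours=6), now))  # False: data is 7.5 hours old
```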

3. Plan for Failure

Design recovery mechanisms:

- Automated retry strategies

- Manual intervention points

- Rollback procedures

- Data consistency checks
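Automated retries work best for transient failures such as network timeouts. The sketch below retries only a configurable set of exception types and waits with exponential backoff and jitter between attempts. Once attempts run out, it raises an escalation error, a natural hand-off to a manual intervention point. `retry_with_backoff` and `NeedsManualIntervention` are hypothetical names.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


class NeedsManualIntervention(Exception):
    """Raised when automated retries are exhausted; a hook for human follow-up."""


def retry_with_backoff(fn: Callable[[], T],
                       max_attempts: int = 4,
                       base_delay_s: float = 1.0,
                       max_delay_s: float = 60.0,
                       retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError)) -> T:
    """Call `fn`, retrying transient errors with exponential backoff and full jitter.

    Exceptions not listed in `retry_on` (for example, bad input data) propagate
    immediately, since retrying them would only repeat the failure.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on as exc:
            if attempt == max_attempts:
                raise NeedsManualIntervention(f"gave up after {attempt} attempts") from exc
            # Wait a random time up to base * 2^(attempt - 1), capped at max_delay_s.
            time.sleep(random.uniform(0, min(max_delay_s, base_delay_s * 2 ** (attempt - 1))))
    raise AssertionError("unreachable")  # the loop always returns or raises


# Usage: an upstream call that fails twice with a transient error, then succeeds.
calls = {"n": 0}


def fetch_upstream() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("upstream API unavailable")  # simulated transient failure
    return "rates loaded"


print(retry_with_backoff(fetch_upstream, base_delay_s=0.01))  # rates loaded
print(calls["n"])  # 3
```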

Advanced Patterns in Data Workflow Orchestration

1. Dynamic Workflow Generation

Workflows that adapt to conditions:

- Data volume-based processing strategies

- Quality-based validation paths

- Resource availability-based routing
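Here is a minimal sketch of the first two adaptations. The number of generated tasks depends on data volume, and the validation path depends on a quality signal. The thresholds (five million rows, one-million-row chunks, a 2% null rate) and the function names are illustrative assumptions.

```python
from typing import List, Tuple


def plan_tasks(table: str, row_count: int,
               single_pass_limit: int = 5_000_000,
               chunk_rows: int = 1_000_000) -> List[Tuple[str, int, int]]:
    """Generate (task_name, start_row, end_row) tasks based on data volume.

    Small loads become one task; large loads are split into fixed-size chunks
    that a scheduler could run in parallel.
    """
    if row_count <= single_pass_limit:
        return [(f"{table}_full", 0, row_count)]
    return [
        (f"{table}_chunk_{i}", start, min(start + chunk_rows, row_count))
        for i, start in enumerate(range(0, row_count, chunk_rows))
    ]


def validation_path(null_fraction: float, threshold: float = 0.02) -> str:
    """Route a batch to stricter handling when its share of null values is too high."""
    return "quarantine_and_review" if null_fraction > threshold else "standard_checks"


print(plan_tasks("orders", 120_000))          # [('orders_full', 0, 120000)]
print(len(plan_tasks("orders", 12_500_000)))  # 13 chunk tasks
print(validation_path(0.10))                  # quarantine_and_review
```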

2. Hybrid Processing Models

Combining different processing patterns:

- Batch processing for historical data

- Micro-batch for recent data

- Real-time for critical updates
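A hybrid model needs a routing rule that decides which path each piece of data takes. In this sketch, critical events go to the real-time path, events less than an hour old are grouped into micro-batches, and older data goes to batch processing. The one-hour cut-off, the micro-batch size, and the function names are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import List


def route_by_age(event_time: datetime, now: datetime, is_critical: bool = False,
                 micro_batch_window: timedelta = timedelta(hours=1)) -> str:
    """Choose a processing path for one record based on criticality and age."""
    if is_critical:
        return "real_time"
    if now - event_time <= micro_batch_window:
        return "micro_batch"
    return "batch"


def micro_batches(items: List[str], size: int) -> List[List[str]]:
    """Group recent records into fixed-size micro-batches (the last may be smaller)."""
    return [items[i:i + size] for i in range(0, len(items), size)]


now = datetime(2024, 11, 25, 12, 0, tzinfo=timezone.utc)
print(route_by_age(now - timedelta(minutes=5), now, is_critical=True))  # real_time
print(route_by_age(now - timedelta(minutes=20), now))                   # micro_batch
print(route_by_age(now - timedelta(days=3), now))                       # batch
print(micro_batches(["e1", "e2", "e3", "e4", "e5"], size=2))  # [['e1', 'e2'], ['e3', 'e4'], ['e5']]
```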

Conclusion: The Path Forward

Building a robust workflow orchestration system is an iterative journey. Key principles to follow:

- Start Simple but Plan for Complexity:
  - Begin with clear workflow definitions
  - Add sophistication based on actual needs
  - Keep monitoring and alerting as priorities

- Focus on Reliability:
  - Make failure handling a first-class citizen
  - Build comprehensive monitoring
  - Plan for recovery scenarios

- Enable Growth:
  - Design for scalability
  - Build reusable components
  - Document extensively

Remember: The goal isn't to build the most advanced system possible, but to create one that reliably meets your organization's needs while being maintainable and scalable.

Your orchestration system should grow with your organization, adding complexity only when needed and always in service of clear business objectives.

Modern Orchestration Made Simple

Growing organizations need orchestration capabilities that balance power with simplicity. A modern approach with Autonmis delivers:

- Low-code workflow builders with AI assistance for rapid pipeline development

- Flexible notebook environments that combine SQL and Python for custom transformations

- Built-in monitoring and alerting with automatic error handling and retries

- Smart scheduling that optimizes resource usage and manages dependencies automatically

Ready to Scale Your Data Operations?

Start with a proven platform that combines enterprise capabilities with startup agility. Schedule a demo to see how Autonmis can simplify your data management.

Recommended Blogs

2/10/2026

AB

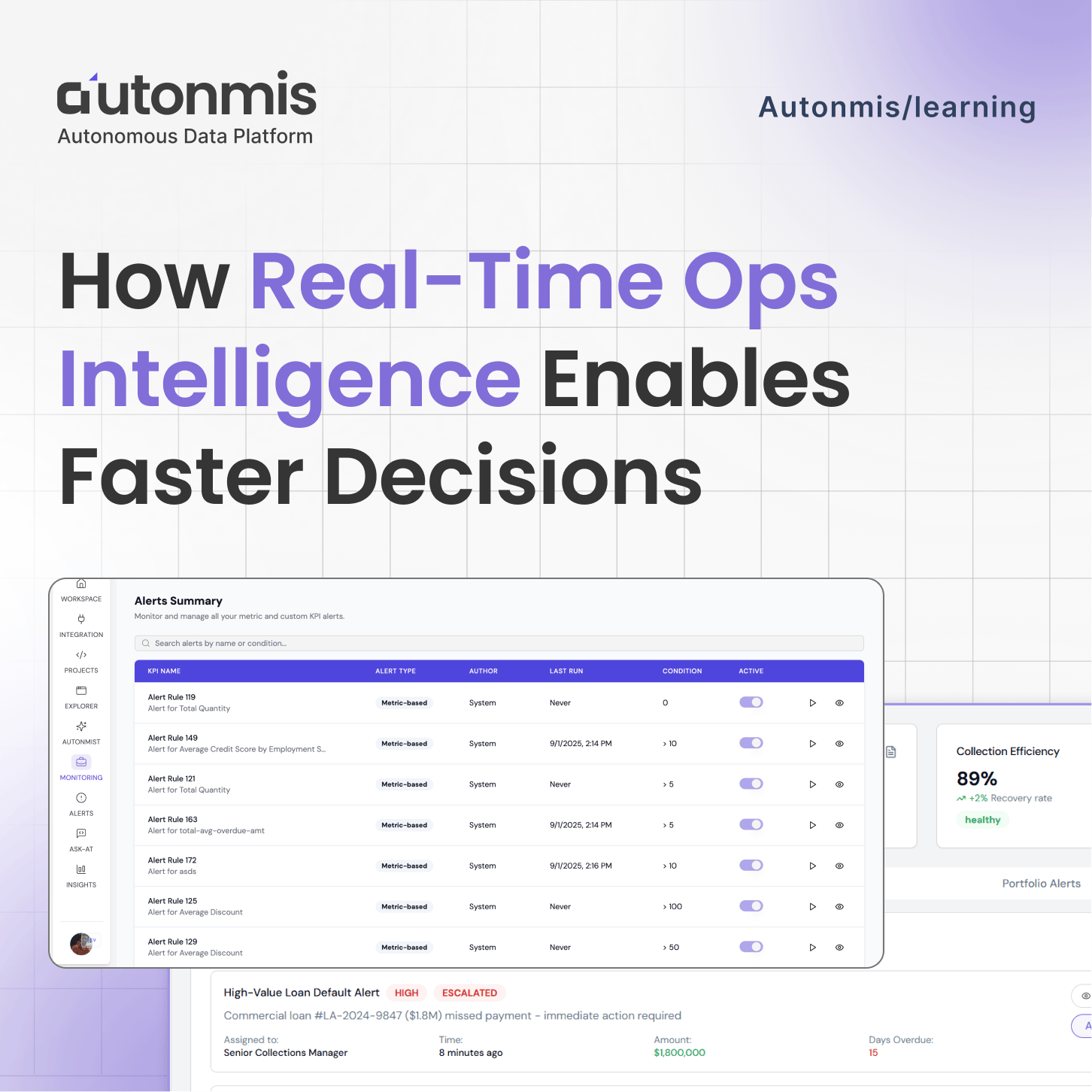

How Real-Time Ops Intelligence Enables Faster Decisions

12/24/2025

AB

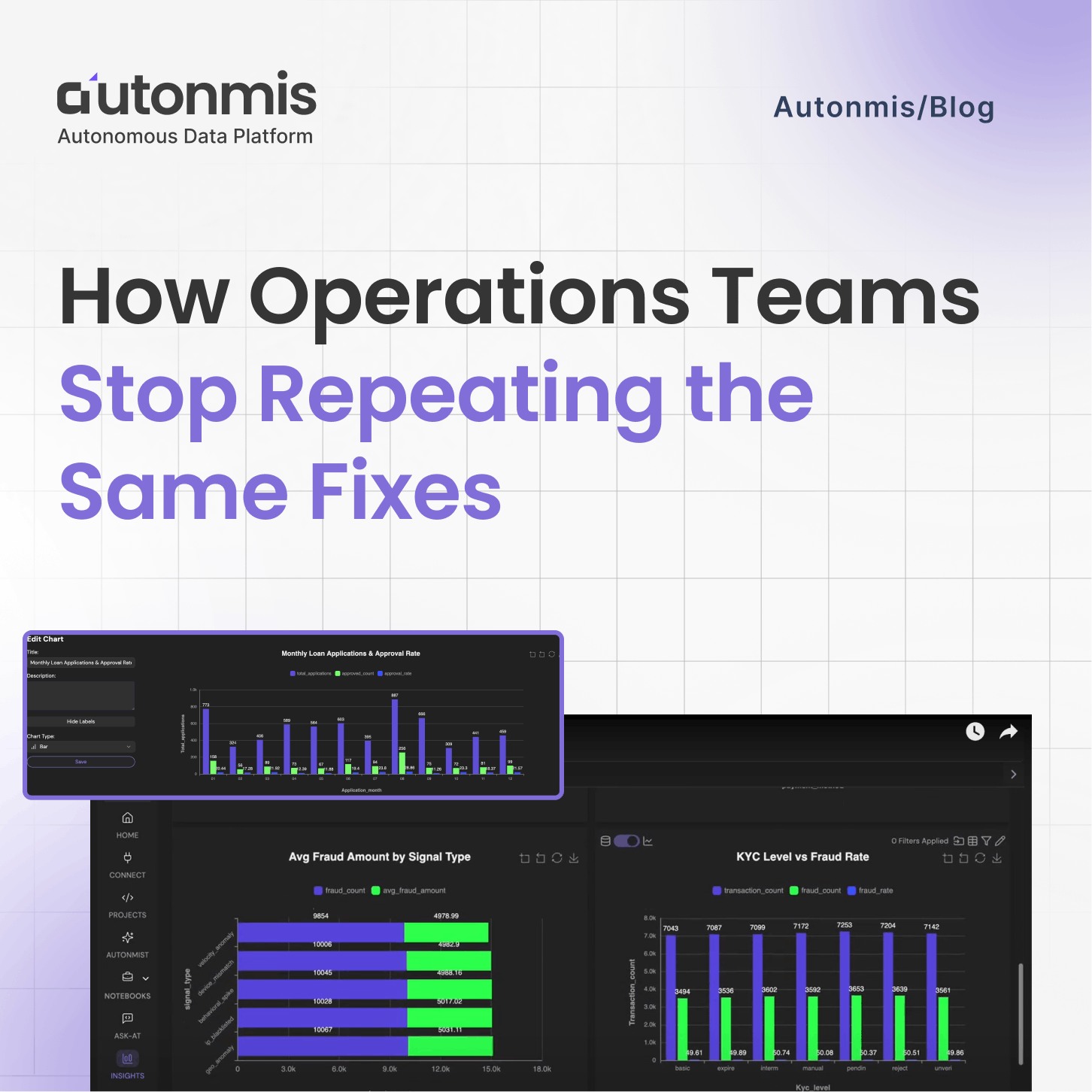

How Operations Teams Stop Repeating the Same Fixes

Actionable Operations Excellence

Autonmis helps modern teams own their entire operations and data workflow — fast, simple, and cost-effective.