6/20/2025

AB

How AI is Transforming the Role of Data Engineer

AI is transforming the role of data engineers by shifting their focus from reactive pipeline maintenance to designing intelligent, low-code, AI-assisted workflows. The most successful teams start with AI-powered monitoring and evolve into predictive quality and scalable orchestration—without overengineering from day one.

AI is transforming the role of the data engineer, and the shift is far more fundamental than many realize. Data engineering is undergoing its most dramatic change since the rise of the cloud. AI agents aren’t just automating pipelines; they’re redefining what it means to build, monitor, and scale modern data systems. If you’ve ever written a late-night ETL script or wrestled with alert floods, this is the transformation you’ve been waiting for.

A New Playbook for Data Teams

AI is transforming data engineering by replacing hand-crafted cron jobs and down-the-stack debugging with intuitive, low-code interfaces. Today’s AI-native platforms scaffold everything from SQL notebooks to deployment workflows, often without requiring complex DevOps expertise. Analysts get drag-and-drop dashboards; engineers get low-code automation tools that streamline the entire data lifecycle.

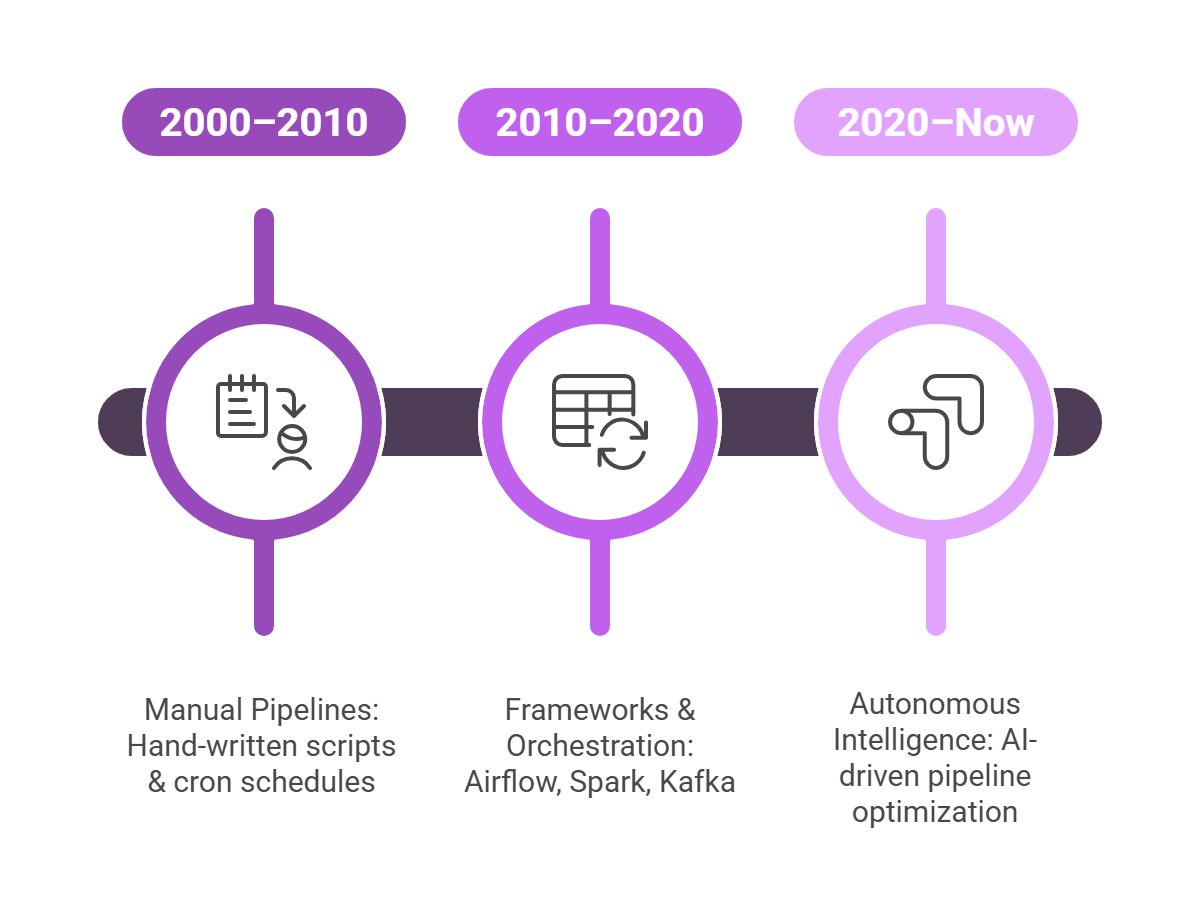

Three Generations of Change

Manual Pipelines (2000–2010)

- Hand‑written scripts & cron schedules

- Reactive data‑quality fixes

- Incident resolution measured in days

Frameworks & Orchestration (2010–2020)

- Airflow, Spark, Kafka

- Infrastructure‑as‑Code

- Proactive monitoring & alerts

Autonomous Intelligence (2020–Now)

- AI‑driven pipeline optimization

- Predictive data‑quality checks

- Integrated visual pipeline scheduling

AI is making this third wave of automation more accessible and intelligent than ever. Most teams are only halfway there; those who go all-in find weeks of work reduced to days, with fewer surprises along the way.

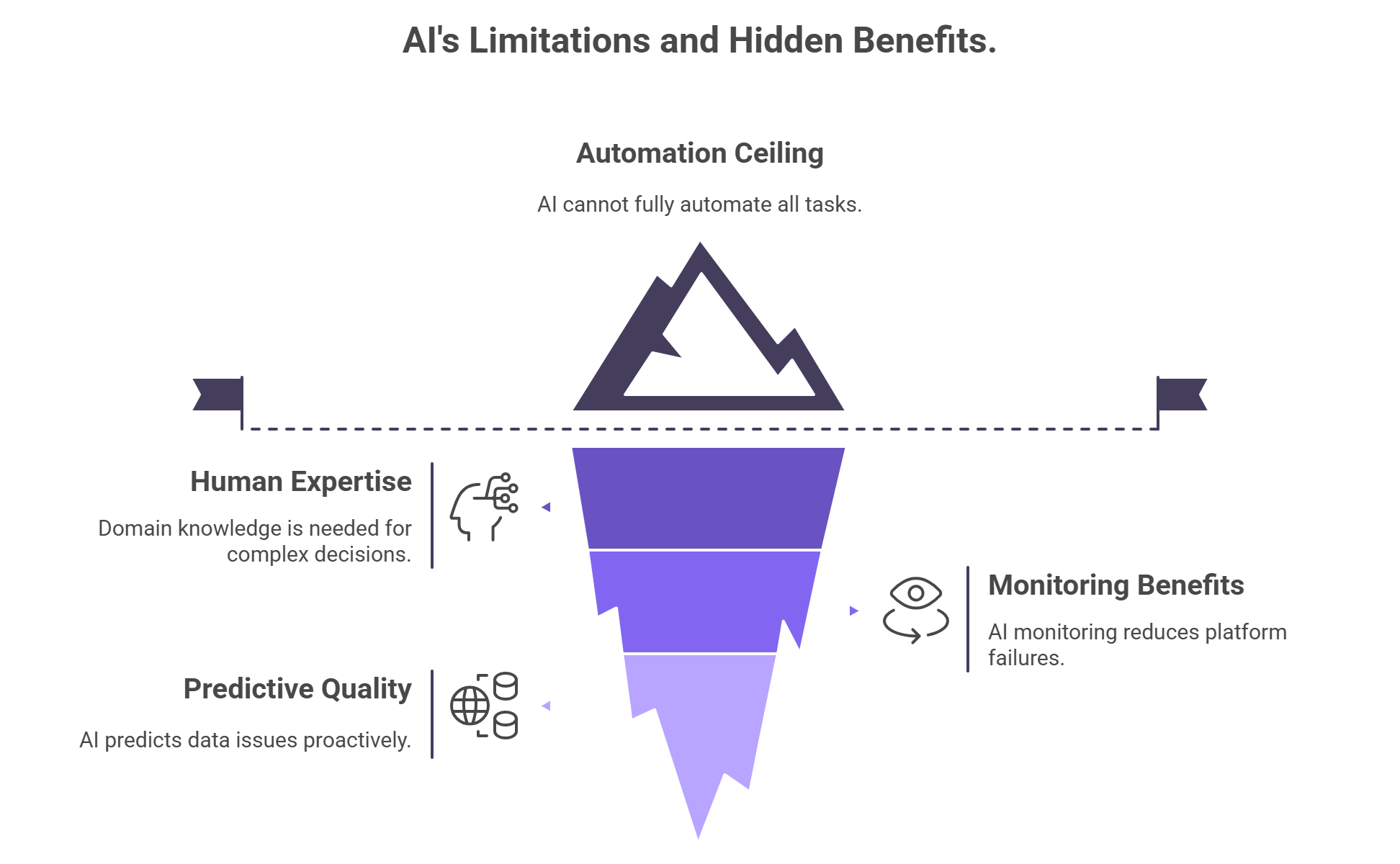

Reality Check: Where AI Helps (and Where It Doesn’t)

70% Automation Ceiling: AI excels at repetitive tasks such as data format conversions, routine health checks, and simple enrichment, but about 30% of pipeline logic remains too nuanced to automate. Human judgment still drives schema design, edge-case debugging, and domain-sensitive decisions.

Monitoring First for Rapid Wins: Instead of diving headfirst into full automation, teams starting with AI-powered monitoring get instant visibility into system health, drift, and anomalies. These early adopters report a 40% drop in platform failures - freeing engineers to focus on high-value work.
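As a rough illustration of what a monitoring-first starting point can look like, the sketch below flags days whose row count deviates sharply from the trailing week. Everything here is an assumption made for illustration: the function name `find_anomalies`, the 7-day window, the z-score threshold of 3, and the sample counts are hypothetical, and production monitoring tools typically rely on more sophisticated models.

```python
from statistics import mean, stdev


def find_anomalies(values, window=7, z_threshold=3.0):
    """Return indices whose value deviates from the trailing-window mean
    by more than z_threshold standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all is worth flagging.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical daily row counts for one table; day 8 is a partial load.
daily_row_counts = [1000, 1020, 990, 1010, 1005, 995, 1015, 1008, 400, 1002]
print(find_anomalies(daily_row_counts))  # [8]
```

A trailing window keeps the check cheap and explainable, which suits an early pilot. The trade-off is that a single outlier inflates the window's spread for the next few days, so later anomalies can be masked until it ages out.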

Predictive Quality for Proactive Reliability: Traditional pipelines wait for errors to surface, often at the worst time. Predictive checks flag issues like schema drift or null spikes before they impact production. These checks initially uncover more hidden flaws, but once those flaws are resolved, incident rates drop by nearly 85%.
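The two checks named above, schema drift and null spikes, can be sketched in a few lines. This is a simplified example under stated assumptions rather than any particular platform's implementation: the helper names (`schema_drift`, `null_rate`, `null_spike`), the column names, the 10% baseline, and the 5-point tolerance are all hypothetical.

```python
def schema_drift(expected, observed):
    """Compare two {column: type} mappings and report the differences."""
    shared = set(expected) & set(observed)
    return {
        "missing": sorted(set(expected) - set(observed)),
        "unexpected": sorted(set(observed) - set(expected)),
        "retyped": sorted(c for c in shared if expected[c] != observed[c]),
    }


def null_rate(rows, column):
    """Fraction of rows where the column is absent or None."""
    if not rows:
        return 0.0
    return sum(1 for row in rows if row.get(column) is None) / len(rows)


def null_spike(rows, column, baseline_rate, tolerance=0.05):
    """True when the batch's null rate exceeds the baseline plus tolerance."""
    return null_rate(rows, column) > baseline_rate + tolerance


# Hypothetical schema contract versus what arrived in today's batch.
expected = {"order_id": "int", "amount": "float", "country": "str"}
observed = {"order_id": "str", "amount": "float", "region": "str"}
drift = schema_drift(expected, observed)
print(drift)

# Hypothetical batch where half of the "amount" values are missing.
batch = [{"amount": 10.0}, {"amount": None}, {"amount": None}, {"amount": 5.0}]
print(null_spike(batch, "amount", baseline_rate=0.10))  # True
```

Running checks like these on each incoming batch, before it is loaded downstream, is what makes the approach proactive rather than reactive.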

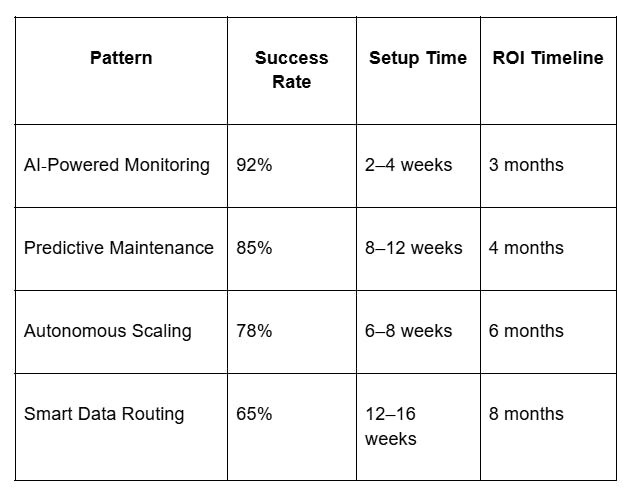

The Tech Patterns Winning in Production

Teams that start with AI-powered monitoring and anomaly detection, rather than rushing into end-to-end autonomy, reduce failure rates significantly.

Shifting Skill Sets for 2025

Still Essential:

- SQL (AI can generate ~80% of common queries)

- Python/Scala for customization

- Cloud‑platform expertise for deployment and scaling

Must‑Have New Skills:

- AI/ML Ops: Model versioning, pipeline orchestration, drift detection

- Prompt Engineering for Data: Writing effective prompts for structured analysis and building trustworthy human-AI workflows

- AI Infrastructure Design: Managing vector DBs, real-time inference, and hybrid batch/stream environments

As AI transforms the data engineer role, engineers are being redefined as human-AI orchestrators who design workflows where automation assists, but doesn’t replace, decision-making.

A Real‑World Win: Netflix’s Journey

Publicly available case studies show that AI-native workflows dramatically improve efficiency. Incident resolution times drop by up to 50%, and debugging workloads are cut by 70%. The real win isn’t replacing engineers - it’s freeing them to design architecture instead of firefighting issues.

Beware the Pitfalls

- Overconfidence: AI that’s wrong with high confidence is more dangerous than broken pipelines. Always use thresholds and escalation policies.

- Black‑Box Decisions: Maintain glass-box transparency so AI-driven actions are traceable and auditable.

- Skill Atrophy: Schedule "manual mode" debugging drills to preserve your team's analytical depth.
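One way to picture the first two safeguards together is a small routing function that applies confidence thresholds, escalates low-confidence suggestions, and records every decision in an audit trail. This is a minimal sketch under assumed conventions: the `Decision` record, the `route_action` function, the 0.9 and 0.6 thresholds, and the `destructive` flag are hypothetical choices, not a standard API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Decision:
    """One auditable record of how an AI-suggested action was handled."""
    action: str
    confidence: float
    destructive: bool
    route: str
    decided_at: str


def route_action(action, confidence, audit_log, destructive=False,
                 auto_threshold=0.9, review_threshold=0.6):
    """Decide how to handle an AI suggestion and log the decision."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < review_threshold:
        route = "escalate"        # too uncertain: hand to the on-call engineer
    elif confidence < auto_threshold or destructive:
        route = "human_review"    # plausible, or risky: needs a sign-off
    else:
        route = "auto_apply"      # high confidence and non-destructive
    audit_log.append(Decision(action, confidence, destructive, route,
                              datetime.now(timezone.utc).isoformat()))
    return route


audit_log = []
print(route_action("retry failed load job", 0.95, audit_log))
print(route_action("drop and rebuild table", 0.97, audit_log, destructive=True))
print(route_action("rewrite join condition", 0.40, audit_log))
print(len(audit_log))  # 3
```

Routing destructive actions to review regardless of score reflects the first pitfall: a confidence threshold alone does nothing against a model that is confidently wrong.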

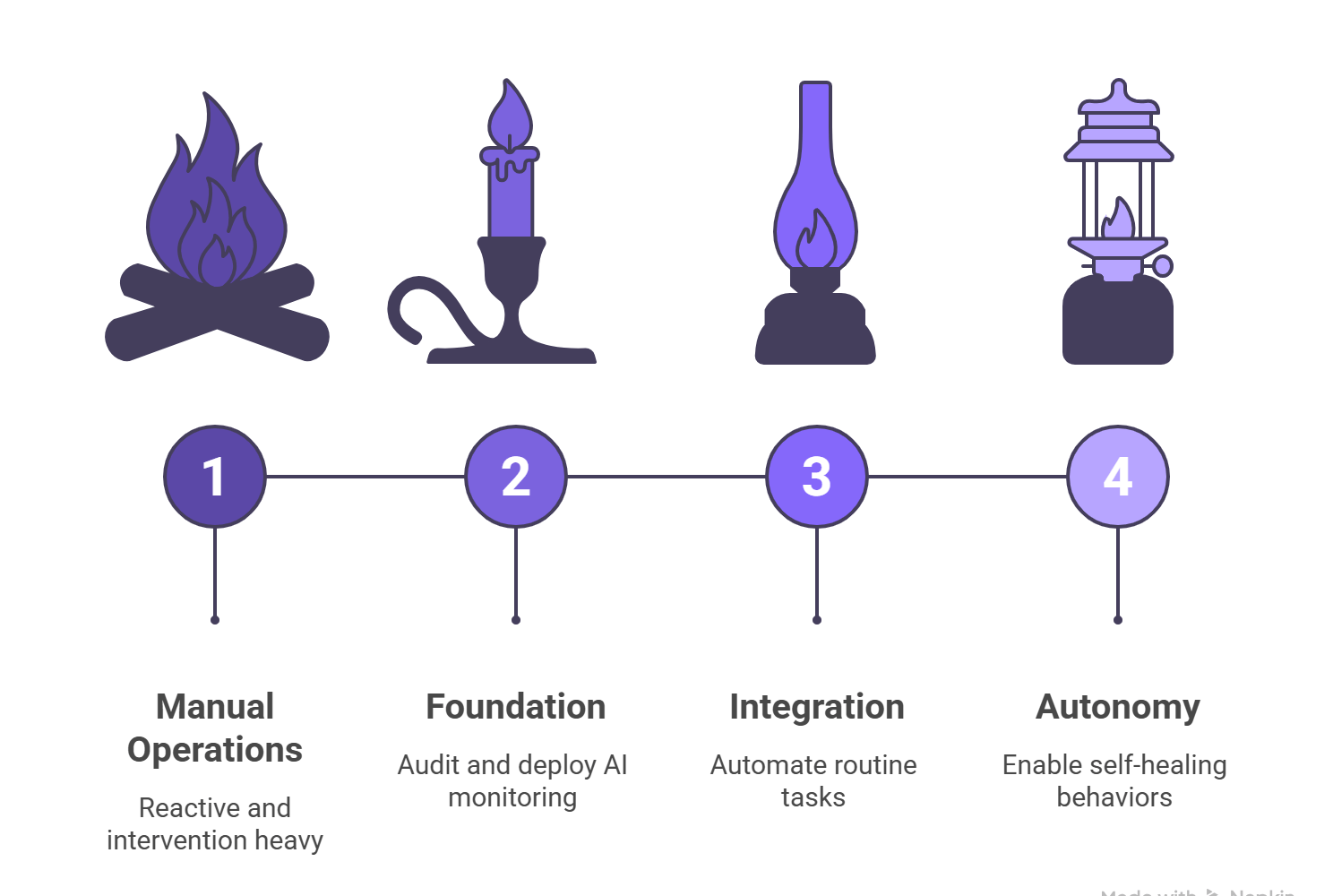

Get Started: A Three‑Phase Roadmap

Phase 1: Foundation (0–3 months)

- Audit existing workflows and baseline MTTR

- Deploy AI-powered monitoring and anomaly detection

- Run pilots with clear success metrics
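Baselining MTTR in Phase 1 can be as simple as averaging the time between detection and resolution across past incidents. The sketch below assumes incidents are available as (detected, resolved) timestamp pairs; the function name `mttr_hours` and the sample incidents are hypothetical.

```python
from datetime import datetime


def mttr_hours(incidents):
    """Mean time to resolution in hours for (detected, resolved) pairs."""
    if not incidents:
        raise ValueError("need at least one incident to compute MTTR")
    durations = [
        (resolved - detected).total_seconds() / 3600
        for detected, resolved in incidents
    ]
    return sum(durations) / len(durations)


# Hypothetical incident history: resolutions took 4, 1, and 7 hours.
incidents = [
    (datetime(2025, 1, 1, 8, 0), datetime(2025, 1, 1, 12, 0)),
    (datetime(2025, 1, 3, 10, 0), datetime(2025, 1, 3, 11, 0)),
    (datetime(2025, 1, 5, 0, 0), datetime(2025, 1, 5, 7, 0)),
]
print(mttr_hours(incidents))  # 4.0
```

Capturing this number before any AI tooling is deployed gives the pilots a concrete baseline to be measured against.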

Phase 2: Integration (4–9 months)

- Automate routine data-quality checks and capacity alerts

- Operationalize pipelines using visual scheduling and low-code orchestration

- Target 30% fewer interventions, 50% faster detections
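The Phase 2 targets are percentage reductions against the Phase 1 baseline, so tracking them is straightforward arithmetic. The sketch below is illustrative only: the helper name `percent_reduction` and the before-and-after figures are made up for the example.

```python
def percent_reduction(baseline, current):
    """Percentage drop from baseline to current (negative if it rose)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100 * (baseline - current) / baseline


# Hypothetical figures: manual interventions per month, and median
# minutes from failure to detection, before and after Phase 2.
interventions = percent_reduction(baseline=40, current=26)
detection_time = percent_reduction(baseline=60, current=33)

print(interventions, interventions >= 30)    # 35.0 True
print(detection_time, detection_time >= 50)  # 45.0 False
```

In this made-up case the team has met the intervention target but is still short of the detection target.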

Phase 3: Autonomy (10–18 months)

- Enable self-healing behaviors and AI-assisted capacity planning

- Achieve up to 70% reduction in operational overhead

FAQs

Q4. Can AI completely replace data engineers?

No. AI augments rather than replaces data engineers: it handles routine tasks while humans provide the context, creativity, and strategic decision-making that AI cannot replicate.

Q5. How do I prove ROI from AI in data engineering to leadership?

Track metrics like reduction in manual tasks, MTTR (mean time to resolution), pipeline uptime, and time-to-insight. Early wins from AI-powered monitoring and anomaly detection can justify further investment.

Q6. How does AI reduce data quality issues?

AI enables predictive data quality by detecting anomalies, schema drift, and null spikes before they impact production, resulting in up to 85% fewer downstream incidents.

Q7. What platform helps implement AI-driven workflows without DevOps complexity?

Autonmis is purpose-built for teams that want to integrate AI-driven analytics and data workflows without heavy DevOps involvement. With its no-code/low-code interface, visual workflow graphs, Python and SQL notebooks, and built-in AI assistant for notebook scaffolding, Autonmis enables both analysts and data engineers to collaborate, schedule, and automate pipelines—without needing to rewrite infrastructure or maintain custom orchestration scripts.

Powered by the Next Generation of Data Engineering

Autonmis is purpose-built for teams entering the AI-native era of data engineering. With AI-assisted notebooks, low-code pipeline scheduling, anomaly detection, and a visual console that bridges analysts and engineers, it lets you start small (monitor first, automate next) and scale intelligently without over-engineering.

